Let's Talk about Map

As a Java Developer, the word Map is definitely not unfamiliar. Whether it’s in the development process or going out for an interview, we often encounter it. The most frequently used and interview questions are nothing more than these few, HashMap, HashTable, ConcurrentHashMap. So this article will summarize these points.

Starting with HashMap

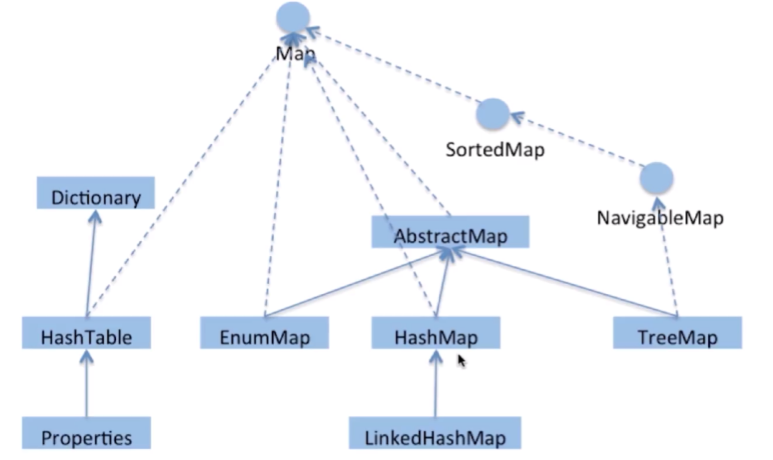

HashMap is the most frequently used of the several Maps mentioned above, after all, the scenarios that need to consider multithreading concurrency are not too many. Below is a relationship diagram of Map, you can understand it.

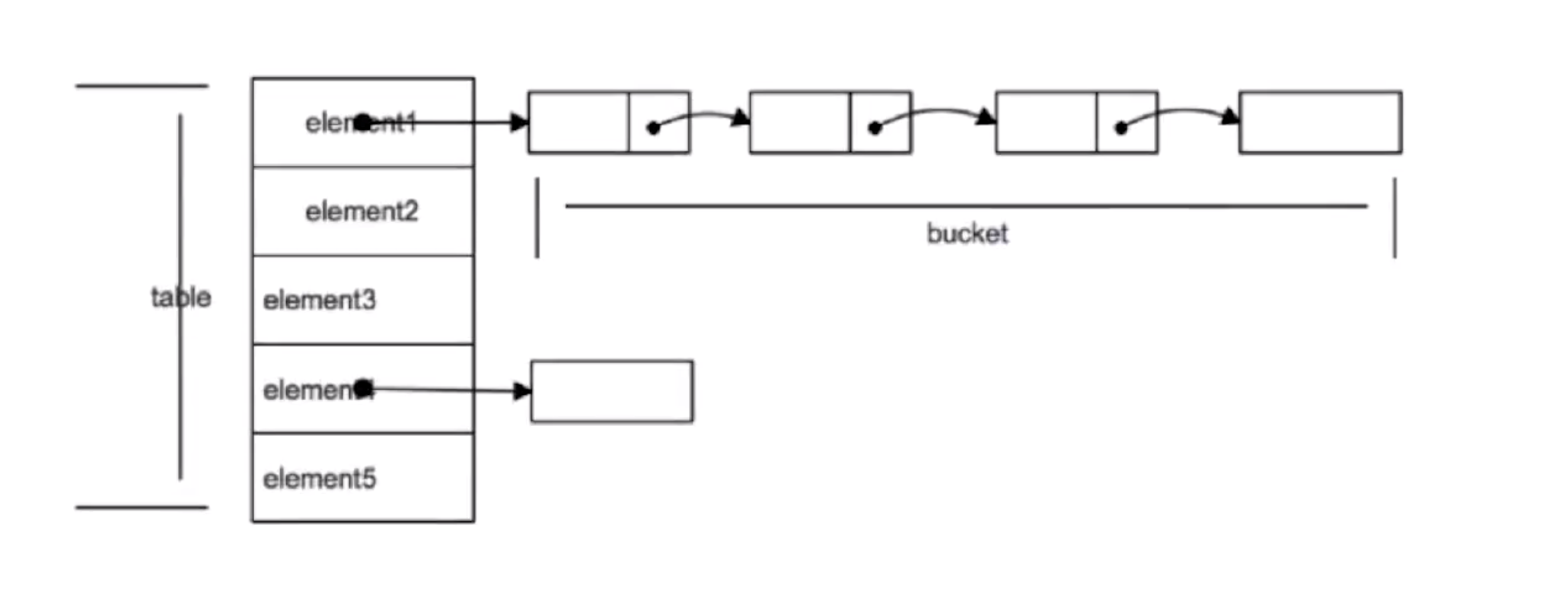

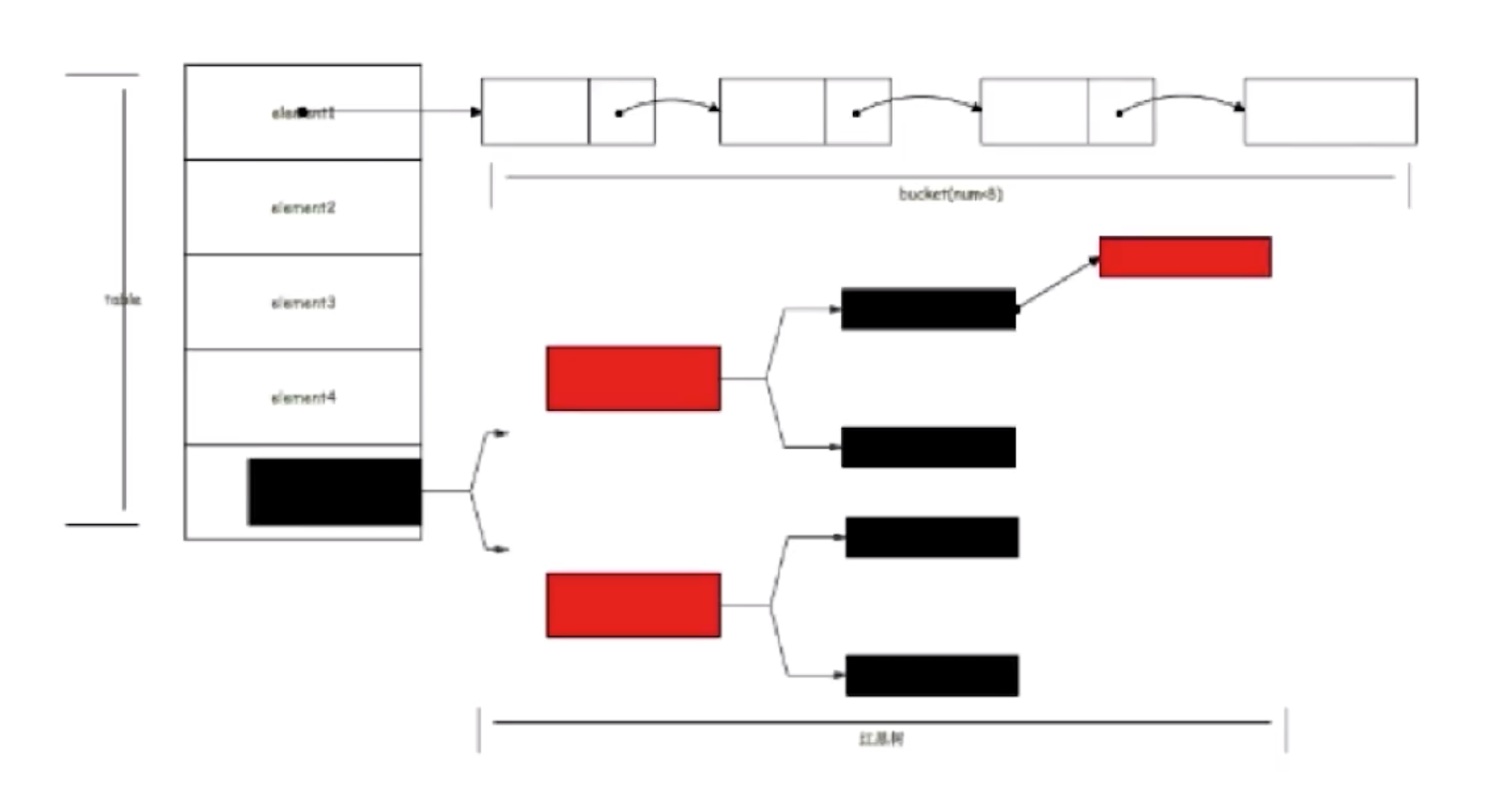

HashMap has a big difference before and after Java8. Before Java8, its data structure was in the form of an array + linked list. After 8, it became an array + linked list + red-black tree structure. Its key is saved in a Set, which has the function of deduplication, and values exist in a Collections.

The elements stored in the array of HashMap are instances in the form of key-value. In Java7, it is called Entry, and in 8 it is called Node. This Node contains several attributes such as hash value, key-value pair, next node next. The array is divided into buckets, that is, buckets, and the key-value pair is located in this array through the hash value. If the hash values are the same, they are stored in the form of a linked list. If the length of the linked list exceeds the threshold, it is converted into a red-black tree. Let’s take a look at the put operation of HashMap.

|

|

Then the summary is:

- If the HashMap is not initialized, initialize the operation

- Hash the Key and calculate the subscript based on the Hash value

- If there is no collision, put it into the bucket directly

- If there is a collision, link to the back as a chain table

- If the length of the chain table exceeds the threshold, and the HashMap element exceeds the minimum tree capacity, then turn the chain table into a red-black tree

- If the node already exists, replace the old value with the new value

- If the bucket is full, you need to resize (reorder after expanding the capacity by a factor of 2)

This put operation leads to several knowledge points. First, What is the initial capacity of the HashMap? Why is it set to this value? Looking at the source code, we can see that there is a variable DEFAULT_INITIAL_CAPACITY.

|

|

This is the initial capacity of the HashMap, that is, 16, why use the bit operation so testy to write because the bit operation is more efficient than the arithmetic calculation. Look at the formula for calculating the subscript above: index = HashCode (Key) & (Length- 1), when the length is 16, Length-1 binary is 1111, is a number of all bits are 1, and look at the above note, the initial length of the proposed HashMap is a power of 2, in this case, the result of index is equivalent to the HashCode. The result of index is equivalent to the value of the last few bits of HashCode. Then as long as the input HashCode itself is evenly distributed, the result of the Hash algorithm is even.

Another question, Java8 introduced the red-black tree, when the chain table reaches a certain length will be converted to red-black tree, what is the benefit of introducing red-black tree? What is the threshold value of this transformation and why is it this value?

When the elements put, the first thing is to calculate the subscript according to the hash function and length, but even if the hash function is good, it is difficult to achieve 100% uniform distribution of elements, then it may lead to a large number of elements in the HashMap are stored in the same bucket, there is a long chain table under this bucket, this time the HashMap is equivalent to a single chain table, if the single chain table has n If a single linked table has n elements, the time complexity of traversal is O(n), which completely loses its advantage.

After the introduction of the red-black tree, but the length of the chain table is greater than 8, it will be converted into a red-black tree, if the number of elements of the chain table is less than or equal to 6, the tree structure reverts to a chain table. As for why 8, I have seen two statements, one is because the average lookup length of the red-black tree is log(n), when the length is 8, the average lookup length is 3, if you continue to use the chain table, the average lookup length is 8/2 = 4, which is necessary to convert to a tree. If the length of the chain table is less than or equal to 6, 6/2 = 3, although the speed is also very fast, but the time to convert to a tree structure and generate a tree is not too short. Another argument is that according to Poisson distribution, when the default load factor is 0.75, the probability that the number of elements in a single hash slot is 8 is less than one in a million, so 7 is used as a watershed, equal to 7 when not converted, greater than or equal to 8 is converted, less than or equal to 6 is transformed into a linked table. Both make sense I think either one is fine.

When the bucket is full, the HashMap will be expanded resize, when and how it is expanded?

When the capacity of the bucket reaches the length multiplied by the load factor, it will be expanded, and the default load factor is 0.75.

|

|

First, it creates a new empty Entry array that is twice the length of the original array. Then it iterates through the original Entry array and ReHash all the Entries to the new array. The reason for ReHash here is that we know that the subscript calculation is related to the length, the length is not the same, then the index calculation results are naturally different, so you need to re-hash to the new array, rehash is a more time-consuming process.

Next or insertion-related issues, the new Entry node in the insertion of the chain table, how is inserted?

Before Java8, it was a header insertion method, that is, the new value would replace the original value, and the original value would be pushed down the chain, just like the example above, because the author who wrote the code thought that the later value would be more likely to be looked up to improve the efficiency of the lookup, and after Java8, it was used The tail is inserted. Since there will be conditional competition when expanding, if both threads find that the HashMap needs to be resized, they will try to resize it at the same time. If you use the head insertion method, suppose the original chain is A pointing to B pointing to C, the new chain may appear B pointing to A but A also pointing to B. If you use the tail insertion method to expand the capacity to keep the order of the chain elements, there will be no such problem of the chain becoming a loop.

When putting, we will first determine whether there is a collision, so how to reduce the collision?

Generally there are two methods, one is to use the perturbation function, so that different objects return different hashcode; one is to use the final object to prevent the key value change, and use the appropriate equals method and hashCode method to reduce the occurrence of collisions.

Then for the get method because it is relatively simple not to do too much detailed explanation, in fact, is based on the key hashcode to calculate the subscript of the element in the array, after traversing the Entry object chain, until the element is found.

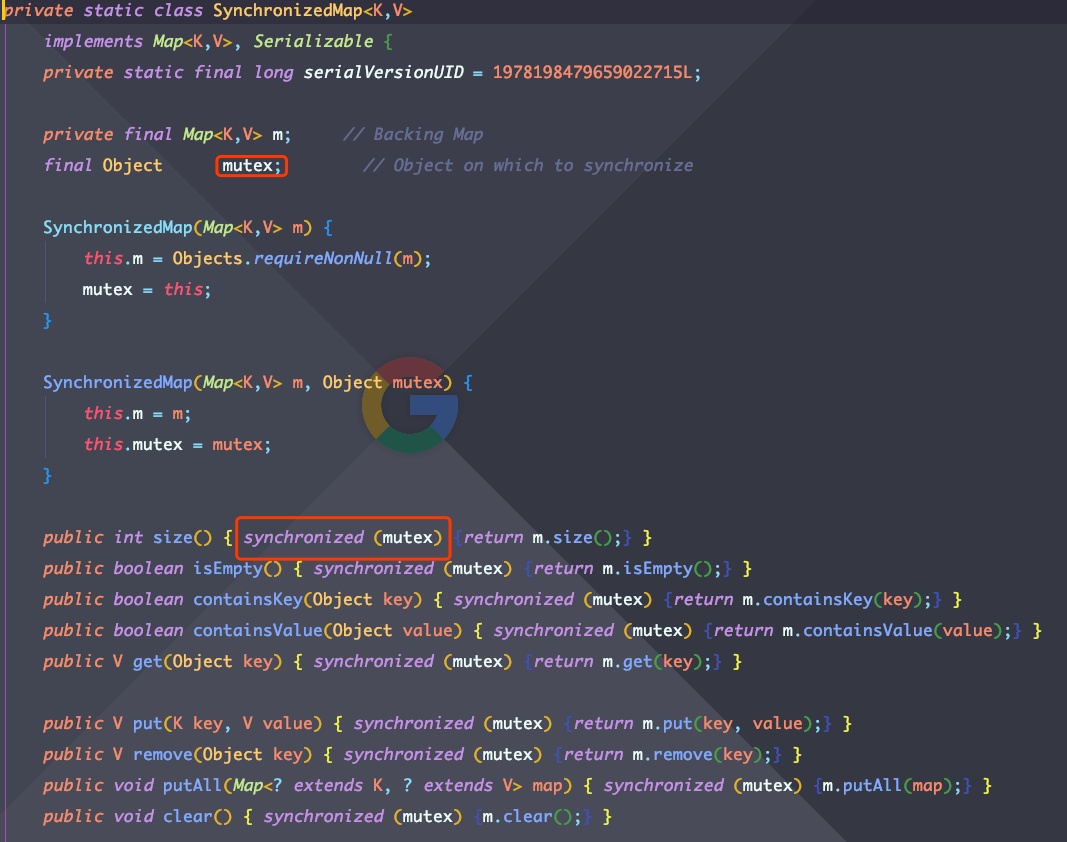

SynchronizedMap

Here additionally mention a Map, is also a solution to the HashMap multi-threaded safety program. That is Collections.synchronizedMap(Map). It will return a thread-safe SynchronizedMap instance. It maintains an exclusion lock mutex inside. for the public method inside, synchronized is used to lock the mutex. Serialized execution in a multi-threaded environment is inefficient.

The above are some simple knowledge points about HashMap, I have organized here is not too much but still very practical (I know so much).

The above are some simple knowledge points about HashMap, I have organized here is not too much but still very practical (I know so much).

HashTable

About HashTable actually can not say too much, because to be honest, anyway, I have never used. All know that it is thread-safe, but it uses a very simple and brutal means. The place involved in the modification of the use of synchronized modified, to serialize the way to run, the efficiency is relatively low. It is very close to the above mentioned SynchronizedMap to achieve thread-safe way, but the lock object is not the same.

ConcurrentHashMap

So let’s talk about another quite common ConcurrentHashMap, its current data structure and the original is also different, the early is also an array + chain, now is an array + chain + red-black tree.

Before Java8, consisting of Segment array, HashEntry, through the segment lock Segment to achieve thread safety, ConcurrentHashMap internal maintenance Segment internal class, inherited ReentrantLock. it will lock a segment of the storage, to each segment of data to assign a lock, that is It is theoretically 16 times more efficient than HashTable. The HashEntry is similar to the HashMap, except that it uses volatile to modify the value of the data and the next node next.

To Java8, it is no longer using Segment segment lock, but the use of CAS + synchronized to ensure thread safety. synchronized locks the first node of the current linked table or red-black tree, so that there are no concurrency problems as long as the hashes do not conflict.

|

|

ConcurrentHashMap and HashMap have similar parameters, but some are unique, such as sizeCtl. It is a control bit identifier when the hash table is initialized or expanded, and a negative number means it is being initialized or expanded. Similarly, let’s look at its put operation.

|

|

The above is an explanation of the entire code, summarized in the following steps:

- Determine whether the Node[] array is initialized, if not, then initialize the operation

- Locate the index coordinates of the array by hash, whether there is a Node node, if not, use CAS to add (the head node of the chain), add failure to enter the next cycle

- Check that the internal capacity is being expanded, and help it to expand in one piece

- If the head node f!=null, then use synchronized to lock the f element (head element of the chain table/red-black binary tree)

- If it is a Node (chain table structure), then add the chain table operation

- If it is a TreeNode structure, then perform the tree add operation

- Determine the length of the chain table has reached the threshold value of 8, this 8 can be adjusted, when the number of nodes exceeds this value will convert the chain table into a tree structure

Using this method, the lock is split more finely compared to Segment. First insert the node using the lock-free operation CAS, and retry in a loop if it fails. If the head node exists, then try to get the synchronous lock of the head node before the operation. As for the get operation, it is also based on the hashcode addressing, if it is on the bucket, it will return the value directly, if not, it will traverse the value according to the chain table or red-black tree.

The difference between HashMap, HashTable and ConcurrentHashMap

Roughly told the basics of their three, then to summarize their differences. Here is a list you can see.

- HashMap thread insecure, array + chain table + red-black tree

- HashTable thread-safe, lock the entire object, array + chain table

- ConcurrentHashMap thread-safe, CAS + synchronous lock, array + chain + red-black tree

- HashMap key, value can be null, the other two can not

- HashTable uses a fail-safe mechanism, which makes the data you read this time may not be the latest data. If you use a null value, it will make it impossible to determine whether the corresponding key does not exist or is empty, because you cannot call contain(key) again to determine whether the key exists, the same for ConcurrentHashMap

- The initial capacity of HashMap is: 16, and the initial capacity of HashTable is: 11. The default load factor of both is: 0.75.

- When the existing capacity is larger than the total capacity * load factor, the expansion rule of HashMap is double the current capacity, and the expansion rule of HashTable is double the current capacity + 1.

- The Iterator iterator in HashMap is fail-fast, while HashTable’s Enumerator is not fail-fast.

- Fail-fast is a mechanism in the java collection, when iterator iterator over a collection object, if the contents of the collection object is modified (added, deleted, modified) during the iteration process, then a Concurrent Modification Exception will be thrown.

The above is about Map-related knowledge points. Thanks for reading !