Brief Discussion on Distributed Transactions

This article covers the basics of distributed transactions, a topic you cannot avoid when learning distributed systems. The classic example is a bank transfer: account A transfers 100 yuan to account B, so A's balance must decrease by 100 and B's must increase by 100. Both steps must succeed for the transfer to count as successful; if only one succeeds, it must be rolled back. If A and B live in different environments or systems, the transfer is a distributed transaction. How, then, do we make sure such a transaction executes correctly, and what approaches are available?

CAP Theory and BASE Theory

Before getting to distributed transactions themselves, let's start with some background on distributed systems. First is the famous CAP theorem. The three letters stand for Consistency, Availability, and Partition tolerance. The CAP theorem states that a distributed system can guarantee at most two of these three properties at the same time.

Consistency means that all nodes see the same, correct data. Availability means that every operation returns a result within a bounded time, that is, every read and write request gets a non-error response. System stability is often described in terms of “nines”: three nines means 99.9% and four nines means 99.99%, so the system fails or returns errors only rarely. Partition tolerance means the system keeps operating when the network between nodes is partitioned or some nodes fail.
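
To make the “nines” concrete, the short sketch below converts an availability level into a yearly downtime budget. The function name and the 365-day year are assumptions chosen for illustration; three nines works out to 525.6 minutes (about 8.76 hours) per year.

```python
def allowed_downtime_minutes(nines: int, period_days: float = 365.0) -> float:
    """Return the downtime budget in minutes for an availability of N nines."""
    unavailability = 10.0 ** (-nines)  # 3 nines -> 0.001, 4 nines -> 0.0001
    return period_days * 24 * 60 * unavailability


for n in (3, 4):
    minutes = allowed_downtime_minutes(n)
    print(f"{n} nines: about {minutes:.1f} minutes of downtime per year")
```

Each additional nine divides the budget by ten, which is why the step from three to four nines is much harder than it sounds.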

In practice, distributed architectures are either AP or CP, because a system without P is essentially a standalone, non-distributed application. Among the service registries commonly used at work, ZooKeeper is a CP design and Eureka is an AP design.

In engineering practice, BASE theory evolved from the CAP theorem. BASE stands for Basically Available, Soft state, and Eventually consistent. Its core idea is eventual consistency: when strong consistency cannot be achieved, the system can still be made eventually consistent in a way that suits the business.

Basic availability in BASE means not insisting, as availability in CAP does, that every read and write succeeds at all times; it is enough that the system keeps working at a basic level. For example, the queuing or failed requests we may see during the Double Eleven shopping festival are a deliberate sacrifice of some availability to keep the overall system stable.

Soft state is best understood in contrast to the strong consistency of ACID. ACID requires a transaction to execute either completely or not at all, and all data users see is always consistent. Soft state, by contrast, allows moments of inconsistency: an intermediate state in which data on some nodes lags behind the others.

Eventual consistency means that the data cannot remain in a soft state indefinitely, and ultimately the data on all nodes must be consistent. This is also what we pursue most during development.

Data Consistency Model

Data consistency models are generally divided into strong consistency and weak consistency. The eventual consistency that BASE achieves is a form of weak consistency. Strong consistency, sometimes called linearizability, means that after an update completes, every other process reads the latest value. This is friendly to users, since what they read always reflects what they did before. But it costs availability, and can be understood as choosing CP at the expense of A.

Every consistency model other than strong consistency is a form of weak consistency: the system does not promise that the latest data can be read immediately after an update. To read the updated data reliably you have to wait for a while, and this time gap is called the “inconsistency window”.

Eventual consistency is a special case of weak consistency. It emphasizes that replicas of data on all nodes will become completely consistent after a period of time. Its inconsistency window time is mainly affected by communication delay, system load and the number of replicated copies. According to different guarantees, it can also be divided into different models, including causal consistency and session consistency.

Causal consistency requires the order of causally related operations to be guaranteed, while the order of non-causally related operations does not matter.

Session consistency frames the process of accessing system data within a session. It stipulates that the system can guarantee “read your own writes” consistency within the same valid session, that is, you can always read the latest value of the data item in your access session after executing the update operation.
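
The “read your own writes” guarantee can be illustrated with a toy primary and a lagging replica. This is only a sketch under stated assumptions: the `Store`, `Session`, and `replicate` names are hypothetical, and replication is triggered by hand rather than running in the background.

```python
class Store:
    """A toy node: maps key -> (version, value)."""

    def __init__(self):
        self.data = {}

    def get(self, key):
        return self.data.get(key, (0, None))


class Session:
    """Remembers the versions this session wrote, to get read-your-writes."""

    def __init__(self, primary, replica):
        self.primary, self.replica = primary, replica
        self.written = {}  # key -> version this session last wrote

    def write(self, key, value):
        version = self.primary.get(key)[0] + 1
        self.primary.data[key] = (version, value)
        self.written[key] = version

    def read(self, key):
        version, value = self.replica.get(key)
        if version < self.written.get(key, 0):
            # The replica is still inside the inconsistency window for us,
            # so fall back to the primary.
            version, value = self.primary.get(key)
        return value


def replicate(primary, replica):
    """Asynchronous replication, triggered by hand in this sketch."""
    replica.data.update(primary.data)


primary, replica = Store(), Store()
alice, bob = Session(primary, replica), Session(primary, replica)
alice.write("balance", 100)
print(alice.read("balance"))  # 100: Alice always sees her own write
print(bob.read("balance"))    # None: Bob's session may read stale data
replicate(primary, replica)
print(bob.read("balance"))    # 100: the replica has caught up
```

Bob's stale read is the inconsistency window in action; the session guarantee only covers the session that performed the write.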

To achieve data consistency, we come back to the topic we started with: distributed transactions. Unlike services in a single-machine application, the data here is spread across multiple servers, so the traditional ways of making sure a transaction commits correctly no longer work. That is why there are solutions designed specifically for distributed transactions.

2PC Two-Phase Commit

Two-phase commit (2PC) is a classic, centralized atomic commit protocol that provides strong consistency. It involves two roles, a coordinator and participants. The two phases are the prepare phase (commit-request) and the commit phase.

During the prepare phase, the coordinator asks each participant to prepare to commit the transaction and to say whether it can. Each participant replies with either agree or abort (failure). No participant has committed anything at this point.

After collecting the replies, the coordinator makes a decision: if all participants agree, the transaction is committed; otherwise it is aborted. The coordinator then notifies all participants of the decision, and each participant commits or rolls back accordingly.
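
The two phases described above can be sketched in a few lines. This is a minimal in-memory illustration, not a production protocol: the class and function names are assumptions, there is no network, and failures and timeouts are left out.

```python
from enum import Enum


class Vote(Enum):
    YES = "yes"
    NO = "no"


class Participant:
    def __init__(self, name, can_commit=True):
        self.name = name
        self.can_commit = can_commit
        self.state = "init"

    def prepare(self):
        # Phase 1: do the work and hold the locks, but do not commit yet.
        self.state = "prepared" if self.can_commit else "aborted"
        return Vote.YES if self.can_commit else Vote.NO

    def commit(self):
        self.state = "committed"

    def rollback(self):
        self.state = "aborted"


def two_phase_commit(participants):
    # Phase 1 (prepare): collect a vote from every participant.
    votes = [p.prepare() for p in participants]
    decision = all(v is Vote.YES for v in votes)
    # Phase 2 (commit): broadcast the single global decision.
    for p in participants:
        if decision:
            p.commit()
        else:
            p.rollback()
    return decision


a = Participant("account_a")
b = Participant("account_b", can_commit=False)
print(two_phase_commit([a, b]), a.state, b.state)  # False aborted aborted
```

A single NO vote aborts the whole transaction, so account A is rolled back even though it was ready to commit.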

However, two-phase commit has some problems, listed as follows:

- Resources are blocked synchronously: while the protocol runs, the resources each participant touches are held exclusively by the transaction, so any other node that needs those shared resources is blocked.

- The coordinator is a single point of failure: it is the core role in the algorithm, so once it fails, the participants block. If the failure happens in the second phase, all participants are still holding their locks but never receive the decision, so the resources stay locked and the transaction cannot complete.

- Data can become inconsistent in the commit phase: if the coordinator sends the commit notification but, because of network or other problems, only some nodes receive it, those nodes commit while the rest remain blocked, leaving the data inconsistent.

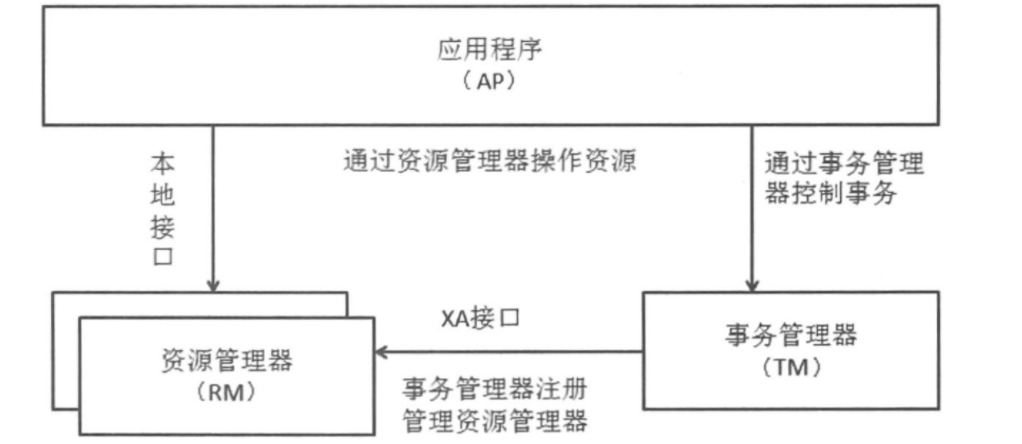

XA Standard

Having covered two-phase commit, it is worth mentioning the XA standard, which often comes up in questions about MySQL, because it is an implementation of two-phase commit. XA is a distributed transaction specification proposed by the X/Open organization. It mainly defines the interface between the transaction manager (TM) and the resource manager (RM).

The transaction manager acts as the coordinator. It coordinates and manages transactions, provides a programming interface to application programs (AP in XA terminology, not to be confused with AP in CAP), and manages the resource managers. It assigns transaction identifiers, monitors progress, and handles transaction completion and failure. A resource manager can be thought of as a DBMS or a message server. The application accesses and controls a resource through its resource manager, and the resource must implement the interface defined by XA. The resource manager provides the storage for shared resources.

At present, mainstream databases provide support for XA. In the JMS specification, that is, the Java Message Service, transaction support is also defined based on XA.

Following 2PC, XA also splits a transaction into two steps, prepare and commit. In the prepare phase, the TM sends a Prepare command to each RM; the RM executes the data operations and returns the result to the TM. Based on the results it receives, the TM enters the commit phase and tells each RM to Commit or Rollback.

In MySQL there are two types of XA transactions, internal XA and external XA.

With the InnoDB storage engine and binlog enabled, MySQL has to keep the binlog and InnoDB’s redo log consistent with each other. It does this with an XA transaction, and because everything happens inside a single MySQL instance, it is called internal XA. Internal XA uses the binlog as the coordinator: committing a transaction requires writing the commit information to the binary log, and the participant is MySQL itself.

External XA is the typical distributed transaction. MySQL supports XA SQL statements such as XA START, XA END, XA PREPARE, XA COMMIT, and XA ROLLBACK, and a distributed transaction can be carried out with them.
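
The order in which an external transaction manager issues these statements can be sketched as follows. To stay runnable without a database, the sketch uses a hypothetical `RecordingConnection` that only records the SQL it is given; the `account` table and the xid values are also assumptions. Error handling is omitted: a real transaction manager would issue XA ROLLBACK on the branches if any prepare failed.

```python
class RecordingConnection:
    """Stand-in for a MySQL connection; it only records the SQL it is given."""

    def __init__(self, name):
        self.name = name
        self.log = []

    def execute(self, sql):
        self.log.append(sql)


def run_xa_branch(conn, xid, statements):
    """Run one branch up to the end of phase 1 (XA PREPARE)."""
    conn.execute(f"XA START '{xid}'")
    for sql in statements:
        conn.execute(sql)
    conn.execute(f"XA END '{xid}'")
    conn.execute(f"XA PREPARE '{xid}'")


def transfer(conn_a, conn_b, amount):
    # Phase 1: each database does its part of the work and prepares.
    run_xa_branch(conn_a, "tx1_a", [
        f"UPDATE account SET balance = balance - {amount} WHERE id = 'A'",
    ])
    run_xa_branch(conn_b, "tx1_b", [
        f"UPDATE account SET balance = balance + {amount} WHERE id = 'B'",
    ])
    # Phase 2: both branches are prepared, so commit both.
    conn_a.execute("XA COMMIT 'tx1_a'")
    conn_b.execute("XA COMMIT 'tx1_b'")


db_a, db_b = RecordingConnection("db_a"), RecordingConnection("db_b")
transfer(db_a, db_b, 100)
for sql in db_a.log:
    print(sql)
```

Here the application code plays the transaction manager role and each MySQL instance is a resource manager.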

3PC Three-Phase Commit

Three-phase commit (3PC) is an improved version based on 2PC with two changes:

- It introduces timeouts, for both the coordinator and the participants.

- It splits the first phase of 2PC into two steps, so the protocol first asks, then locks resources, and only then actually commits.

Its three phases are called CanCommit, PreCommit, and DoCommit.

CanCommit Phase

This phase is very similar to the prepare phase of 2PC: the coordinator sends a CanCommit request to the participants, and each participant answers yes or no.

PreCommit Phase

The coordinator decides whether to proceed with PreCommit based on the participants’ responses. There are two cases:

- All participants return OK: the transaction is pre-committed. The coordinator sends a PreCommit request to the participants; on receiving it, each participant executes the transaction operations but does not commit, and returns an ACK if execution succeeds.

- Not all participants return OK: at least one participant returned NO, or the coordinator timed out waiting for a reply. The transaction is interrupted: the coordinator sends an abort request to the participants, and they abort on receiving it.

DoCommit Phase

This phase performs the actual commit, like the final phase of 2PC. If the coordinator received every ACK in the previous step, it tells the participants to commit; each participant commits and returns an ACK to the coordinator. If the coordinator did not receive every ACK, the transaction is interrupted instead. If a participant has not heard from the coordinator by the time its timeout expires, it commits automatically.

Because both the coordinator and the participants have timeouts in 3PC (in 2PC only the coordinator does), resources are not locked indefinitely when the coordinator fails, and the extra PreCommit phase brings the participants to a consistent state before they commit. But 3PC still does not eliminate inconsistency: as described in the DoCommit phase, a participant that times out without hearing from the coordinator commits anyway, which can leave the data inconsistent.
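
The remaining inconsistency can be reproduced with a small participant state machine. This is a sketch under assumptions: the class and state names are hypothetical, the lost message is simulated by simply not calling `abort` on one participant, and the rule that a participant aborts on a timeout before PreCommit follows the usual description of 3PC rather than the text above.

```python
class Participant3PC:
    def __init__(self, name):
        self.name = name
        self.state = "init"

    def can_commit(self):
        self.state = "ready"
        return True

    def pre_commit(self):
        # Execute the operations and hold resources, but do not commit.
        self.state = "pre_committed"

    def do_commit(self):
        self.state = "committed"

    def abort(self):
        self.state = "aborted"

    def on_timeout(self):
        # After PreCommit the participant commits by default;
        # before it (an assumption here) the participant aborts.
        if self.state == "pre_committed":
            self.state = "committed"
        elif self.state in ("init", "ready"):
            self.state = "aborted"


p1, p2 = Participant3PC("p1"), Participant3PC("p2")
for p in (p1, p2):
    p.can_commit()
    p.pre_commit()

# The coordinator decides to abort (say it missed an ACK).
p1.abort()       # the abort message reaches p1 ...
p2.on_timeout()  # ... but is lost on the way to p2, which times out
print(p1.state, p2.state)  # aborted committed
```

The timeout removes the blocking problem of 2PC, but the two participants end in different states, which is exactly the inconsistency described above.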

TCC (Try-Confirm-Cancel)

The concept of TCC (Try-Confirm-Cancel) comes from a paper titled “Life beyond Distributed Transactions: an Apostate’s Opinion” published by Pat Helland. Its three letters also correspond to three phases:

- Try phase: call the Try interface to attempt the business operation, performing the business checks and reserving the business resources.

- Confirm phase: actually execute the business operation. No business checks are performed; it only uses the resources reserved in the Try phase.

- Cancel phase: mutually exclusive with Confirm, so a transaction enters only one of the two. When business execution fails, Cancel rolls back by cancelling the operation and releasing the reserved resources.

A failure in the Try phase can be handled by Cancel, but what if the Confirm or Cancel phase itself fails? To handle this, TCC adds a transaction log so that a failed Confirm or Cancel can be retried, which means both interfaces must be idempotent. If the retries still fail, manual intervention is required.
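
As a rough illustration of the three interfaces and of idempotent retries, here is a sketch of the debit side of a transfer. The `AccountService` class, its fields, and the transaction ids are assumptions made for the example; a real TCC framework would also persist the transaction log rather than keep it in memory.

```python
class AccountService:
    """Debit side of a transfer, exposing Try / Confirm / Cancel."""

    def __init__(self, balance):
        self.balance = balance  # available balance
        self.frozen = {}        # tx_id -> amount reserved by Try
        self.finished = {}      # tx_id -> "confirmed" or "cancelled"

    def try_debit(self, tx_id, amount):
        # Business check plus resource reservation; nothing is spent yet.
        if self.balance < amount:
            return False
        self.balance -= amount
        self.frozen[tx_id] = amount
        return True

    def confirm(self, tx_id):
        # Idempotent: a retried Confirm finds the transaction finished.
        if tx_id in self.finished:
            return
        self.frozen.pop(tx_id, None)  # the reserved amount is really spent
        self.finished[tx_id] = "confirmed"

    def cancel(self, tx_id):
        # Idempotent: a retried Cancel must not refund twice.
        if tx_id in self.finished:
            return
        self.balance += self.frozen.pop(tx_id, 0)  # release the reservation
        self.finished[tx_id] = "cancelled"


acct = AccountService(balance=500)
acct.try_debit("tx-1", 100)
acct.confirm("tx-1")
acct.confirm("tx-1")  # a retry is harmless
print(acct.balance)   # 400

acct.try_debit("tx-2", 50)
acct.cancel("tx-2")
acct.cancel("tx-2")   # a retry does not refund twice
print(acct.balance)   # 400
```

Recording finished transaction ids is one simple way to get idempotency; without it, a retried Cancel would add the amount back a second time.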

Characteristics of TCC

From the above description, it is obvious that compared with previous methods such as 2PC, TCC focuses on the business layer rather than the database or storage resource layer. Its core idea is that for each business operation, corresponding confirmation and compensation operations must be added, and the related processing is moved from the database to the business layer, so as to achieve cross-database transactions.

But precisely because it handles transactions at the business layer, it is highly intrusive to microservices. Each branch of the business logic needs to implement Try, Confirm, and Cancel operations, and Confirm and Cancel also need to implement idempotency. In addition, the TCC transaction manager needs to record transaction logs, which also consumes some performance.

Adopting TCC usually means relying on a dedicated TCC transaction framework. The most common one is Seata, which you can try for yourself.

Eventual Consistency Based on Message Compensation

In actual production work, we often use a scheme based on message compensation. It is an asynchronous transaction mechanism. Common implementation schemes include local message tables and message queues.

Local Message Table

Let’s start with the local message table, an approach originally proposed by eBay engineers. The core idea is to split a distributed transaction into local transactions and drive them asynchronously through a message log, so it relies on each system’s local transactions to achieve the distributed one. A message table is created in the local database. When the business operation runs, a message is written to this table in the same local transaction, which guarantees that the business data and the message are stored together. Once both are stored, the follow-up business operation is performed. If it succeeds, the message status is updated to successful. If it fails, a scheduled task that keeps scanning for unfinished messages will retry it. Because failures are retried, the business interfaces must be idempotent.

So it can be seen that the local message table method can ensure eventual consistency, but there may be data inconsistencies for some time.

Reliable Message Queue

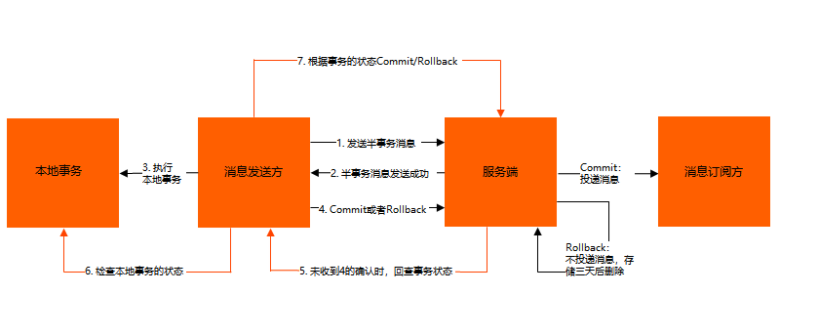

RocketMQ, one of the common message queues, supports transactional messages, so I will use it to show how a message queue can implement distributed transactions. This part comes from Alibaba’s documentation, which you can consult if you are interested. It introduces two concepts:

- Half-transaction message: a message that cannot be delivered yet. The sender has successfully sent it to the RocketMQ server, but the server has not yet received the producer’s secondary confirmation, so the message is marked “temporarily undeliverable”.

- Message checkback: because of a network disconnection, a producer restart, or similar, the secondary confirmation for a transactional message can be lost. When the RocketMQ server scans and finds a message that has stayed in the half-transaction state for a long time, it actively asks the producer for the message’s final status (Commit or Rollback). This inquiry is the message checkback.

The interaction has two parts. The steps for sending a transactional message are as follows:

- The sender sends the half-transaction message to the RocketMQ server.

- After the RocketMQ server persists the message successfully, it returns Ack to the sender confirming that the message has been sent successfully. At this time, the message is a half-transaction message.

- The sender starts executing local transaction logic.

- According to the result of the local transaction execution, the sender submits secondary confirmation (Commit or Rollback) to the server. After receiving the Commit status, the server marks the half-transaction message as deliverable, and the subscriber will eventually receive the message; after receiving the Rollback status, the server deletes the half-transaction message and the subscriber will not receive the message.

The transaction message checkback steps are as follows:

- In special cases such as network disconnection or application restart, the secondary confirmation in step 4 above eventually fails to reach the server. After a fixed time, the server initiates a message checkback for the message.

- After receiving the checkback, the sender checks the final result of the local transaction that corresponds to the message.

- The sender resubmits the secondary confirmation according to the final state of the checked local transaction, and the server still operates on the half-transaction message according to step 4.
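
The send and checkback flows can be simulated with a toy in-memory broker. To be clear about the assumptions: `ToyBroker` and its methods are hypothetical and are NOT the RocketMQ client API; they only mirror the half-message, secondary-confirmation, and checkback steps listed above.

```python
class ToyBroker:
    """In-memory stand-in for the server side; not the RocketMQ API."""

    def __init__(self):
        self.half = {}       # msg_id -> body, not yet visible to subscribers
        self.delivered = []  # bodies visible to subscribers

    def send_half(self, msg_id, body):
        # Persist the half-transaction message and acknowledge it.
        self.half[msg_id] = body
        return "ack"

    def end_transaction(self, msg_id, status):
        # Secondary confirmation: commit makes the message deliverable,
        # rollback simply drops it.
        body = self.half.pop(msg_id, None)
        if body is not None and status == "commit":
            self.delivered.append(body)

    def check_back(self, check_local_transaction):
        # Ask the producer about every half message that is still unresolved.
        for msg_id in list(self.half):
            status = check_local_transaction(msg_id)
            if status in ("commit", "rollback"):
                self.end_transaction(msg_id, status)


broker = ToyBroker()
local_committed = set()

broker.send_half("m1", "order paid")  # the half message is sent and acked
local_committed.add("m1")             # the local transaction succeeds
# The secondary confirmation is lost (for example, the producer restarts),
# so the broker later checks back with the producer.
broker.check_back(lambda m: "commit" if m in local_committed else "rollback")
print(broker.delivered)  # ['order paid']
```

The checkback only works if the producer can look up the outcome of its local transaction by message id, which is why that outcome has to be recorded durably.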

Best Effort Notification

Similar to the reliable message queue method above, there is another scheme called best effort notification. Its core idea is that the notification initiator makes the best effort to notify the recipient of the business processing result through some mechanism. It is generally implemented through MQ as well.

The differences between it and notification based on reliable messages are as follows:

- Different ideas for solutions

Reliable message consistency requires the notification initiator to make sure the message is sent and reaches the recipient. Message reliability is primarily the initiator’s responsibility.

Best effort notification means the initiator does its best to notify the recipient of the business processing result, but the message may still not arrive. In that case the recipient must actively call the initiator’s interface to query the result. Notification reliability is primarily the recipient’s responsibility.

- Different application scenarios for both

Reliable message consistency is about consistency while the transaction is in progress, completing the transaction asynchronously.

Best effort notification is about notification after the transaction has finished, reliably delivering the transaction result.

- Different technical solutions

Reliable message consistency has to guarantee consistency from sending through receiving: a message that is sent must also be received.

Best effort notification cannot guarantee consistency from sending through receiving; it only provides a reliability mechanism on the receiving side. The initiator notifies the recipient as best it can, and when a message cannot be delivered, the recipient actively queries the business processing result.
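
Both halves of best effort notification, bounded retries on the initiator side and an active query on the recipient side, can be sketched as follows. The function and class names, the retry limit, and the `"paid"` result are assumptions for illustration; waiting between attempts is left out to keep the example short.

```python
def notify_with_retries(send, result, max_attempts=3):
    """Initiator side: try a bounded number of times, then give up."""
    for attempt in range(1, max_attempts + 1):
        if send(result):
            return attempt
        # A real system would wait here, typically with growing intervals.
    return None


class Recipient:
    def __init__(self, query_result):
        self.query_result = query_result  # the initiator's query interface
        self.known = None

    def receive(self, result):
        self.known = result
        return True

    def reconcile(self):
        # Recipient side: if no notification arrived, actively ask.
        if self.known is None:
            self.known = self.query_result()
        return self.known


recipient = Recipient(query_result=lambda: "paid")


def network_down(result):
    return False  # every notification attempt is lost


print(notify_with_retries(network_down, "paid"))  # None: the initiator gives up
print(recipient.reconcile())                      # paid: the recipient queried
```

The initiator never blocks on delivery; correctness in the end depends on the recipient reconciling against the query interface.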

Summary

2PC and 3PC are strongly consistent transaction protocols that work at the database level, though they still carry some risk of data inconsistency. TCC is a compensation-based approach that is highly intrusive to the business because it is implemented at the business layer. TCC, message-compensation schemes, and best effort notification are all flexible transactions: they guarantee eventual consistency and tolerate data inconsistency at some points in time.

This article is fairly superficial; it only covers some concepts, and much of it is excerpted from other blogs and from the “Distributed Technology Principles and Practices 45 Lectures” course from Lagou Education. Treat it as reading notes for building up an understanding of the basic concepts of distributed transactions.